How Elasticsearch calculates significant terms

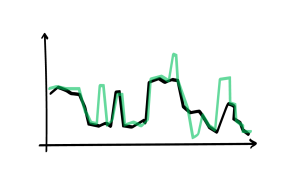

Many of you who use Elasticsearch may have used the significant terms aggregation and been intrigued by this example of fast and simple word analysis. The details and mechanism behind this aggregation tend to be kept rather vague, however, and couched in terms like “magic” and “the commonly uncommon”. This is unfortunate, since developing informative analyses based on this aggregation requires some adaptation of the underlying documents, especially in the face of less structured text. Significant terms seems especially susceptible to garbage-in, garbage-out effects, and developing a robust analysis requires some understanding of the underlying data. In this blog post we will take a look at the default relevance score used by the significant terms aggregation, the mysteriously named JLH score, as it is implemented in Elasticsearch 1.5. This score was developed specifically for this aggregation, and experience shows that it tends to be the most effective one currently available in Elasticsearch.

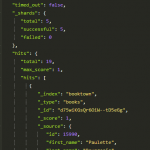

The JLH relevance scoring function is not given in the documentation, but a quick dive into the code reveals the following scoring function:

$$\mathrm{JLH}(p_f, p_b) = (p_f - p_b)\,\frac{p_f}{p_b}$$

Here $p_f$ is the frequency of the term in the foreground (or query) document set, that is, the fraction of foreground documents that contain it, while $p_b$ is the term frequency in the background document set, which by default is the whole index.
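To make the definition concrete, here is a minimal Python sketch of the scoring logic as we read it in the Elasticsearch 1.5 source. The function and parameter names are our own (loosely following the source's subset/superset wording for foreground/background), and the zero guards reflect our reading of the Java code, not a documented contract.

```python
def jlh_score(subset_freq: int, subset_size: int,
              superset_freq: int, superset_size: int) -> float:
    """JLH score for one term (sketch based on our reading of the 1.5 code).

    subset_freq / subset_size:     docs containing the term / total docs, foreground
    superset_freq / superset_size: the same counts for the background set
    """
    if subset_size == 0 or superset_size == 0:
        return 0.0
    if superset_freq == 0:
        superset_freq = 1  # guard against division by zero
    p_f = subset_freq / subset_size      # foreground frequency
    p_b = superset_freq / superset_size  # background frequency
    absolute_change = p_f - p_b
    if absolute_change <= 0:
        return 0.0  # terms that are not over-represented score zero
    relative_change = p_f / p_b
    return absolute_change * relative_change


# A term in 10 of 100 foreground docs and 10 of 1000 background docs
print(jlh_score(10, 100, 10, 1000))
```

With $p_f = 0.1$ and $p_b = 0.01$ the score is $0.09 \times 10 = 0.9$, up to floating-point rounding.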

Expanding the formula gives us the following, which is quadratic in $p_f$:

$$\mathrm{JLH}(p_f, p_b) = \frac{p_f^2}{p_b} - p_f$$

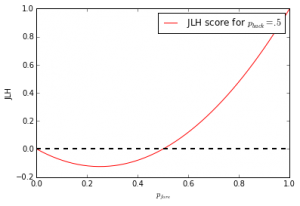

By keeping $p_b$ fixed, and keeping in mind that both it and $p_f$ are positive, we get the following function plot. Note that $p_b$ is unnaturally large for illustration purposes.

On the face of it this looks bad for a scoring function. It changes sign, which can be undesirable, but more troublesome is the fact that it is not monotonically increasing in $p_f$.
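The dip is easy to reproduce numerically. This sketch evaluates the unclamped expanded formula (the helper name `raw_jlh` is ours) for a fixed, deliberately large $p_b$, mirroring the plot:

```python
def raw_jlh(p_f: float, p_b: float) -> float:
    """Unclamped JLH score: (p_f - p_b) * p_f / p_b == p_f**2 / p_b - p_f."""
    return (p_f - p_b) * p_f / p_b


p_b = 0.4  # unnaturally large background frequency, as in the plot
for p_f in [0.0, 0.1, 0.2, 0.3, 0.4, 0.6, 0.8, 1.0]:
    print(f"p_f={p_f:.1f}  score={raw_jlh(p_f, p_b):+.3f}")
```

The score first falls, reaching $-p_b/4 = -0.1$ at $p_f = p_b/2 = 0.2$, returns to zero at $p_f = p_b$, and only then rises.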

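The gradient of the function is:

$$\nabla \mathrm{JLH}(p_f, p_b) = \left( \frac{2 p_f}{p_b} - 1,\; -\frac{p_f^2}{p_b^2} \right)$$

Setting the gradient to zero, the second coordinate vanishes only at $p_f = 0$, where the first coordinate equals $-1$. The gradient therefore never vanishes, and the JLH score has no stationary point, and hence no interior minimum, on its domain $p_f \ge 0$, $p_b > 0$ (it is undefined at $p_b = 0$). The second coordinate is never positive, so the score never increases with background frequency, while the first coordinate shows us where the function is not increasing in $p_f$: it is negative for $p_f < p_b/2$.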
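As a sanity check on the gradient, this sketch compares the analytic partial derivatives with central finite differences at a few arbitrarily chosen points; all helper names are ours.

```python
def raw_jlh(p_f, p_b):
    """Unclamped JLH score in expanded form."""
    return p_f * p_f / p_b - p_f


def analytic_gradient(p_f, p_b):
    """(d/dp_f, d/dp_b) of p_f**2 / p_b - p_f."""
    return (2 * p_f / p_b - 1, -(p_f * p_f) / (p_b * p_b))


def numeric_gradient(p_f, p_b, h=1e-6):
    """Central finite differences in each coordinate."""
    d_f = (raw_jlh(p_f + h, p_b) - raw_jlh(p_f - h, p_b)) / (2 * h)
    d_b = (raw_jlh(p_f, p_b + h) - raw_jlh(p_f, p_b - h)) / (2 * h)
    return (d_f, d_b)


points = [(0.05, 0.2), (0.1, 0.2), (0.3, 0.2), (0.5, 0.01)]
for p_f, p_b in points:
    print(p_f, p_b, analytic_gradient(p_f, p_b), numeric_gradient(p_f, p_b))
```

At $(0.05, 0.2)$ the first coordinate is negative ($p_f < p_b/2$), at $(0.1, 0.2)$ it is exactly zero, and the second coordinate is negative at every point.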

Fortunately, the decreasing part of the function lies in the region where $p_f \le p_b$, where the JLH score is explicitly defined as zero. The parabola $p_f^2/p_b - p_f$ is symmetric around its vertex at $p_f = p_b/2$, where the first coordinate of the gradient is zero, and its roots are $p_f = 0$ and $p_f = p_b$. So the entire area where the score is below zero also lies in this region.
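A brute-force grid check makes the same point: wherever the unclamped score is negative or decreasing in $p_f$, we have $p_f \le p_b$, so the clamp to zero hides it. The grid resolution and function names here are our own choices.

```python
def raw_jlh(p_f, p_b):
    """Unclamped JLH score (p_f - p_b) * p_f / p_b."""
    return (p_f - p_b) * p_f / p_b


def problem_points_outside_clamp(steps=200):
    """Grid points in (0, 1]^2 where the raw score is negative or decreasing
    in p_f even though p_f > p_b, i.e. points the clamp would NOT hide."""
    bad = []
    for i in range(1, steps + 1):
        for j in range(1, steps + 1):
            p_f, p_b = i / steps, j / steps
            negative = raw_jlh(p_f, p_b) < 0
            decreasing = 2 * p_f / p_b - 1 < 0  # first gradient coordinate
            if (negative or decreasing) and p_f > p_b:
                bad.append((p_f, p_b))
    return bad


print(len(problem_points_outside_clamp()))  # prints 0
```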

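With this it seems sensible to drop the linear term of the JLH score and use only the quadratic part, $p_f^2/p_b$. Since $\mathrm{JLH} = \frac{p_f^2}{p_b}\left(1 - \frac{p_b}{p_f}\right)$, the two scores differ by a factor close to one whenever $p_f$ is well above $p_b$, so in that regime they give nearly the same ranking. The simplified score differs from the full one only by the linear offset $p_f$, so its slope in $p_f$ is steeper by exactly one.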
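A quick comparison on made-up frequencies for three hypothetical terms shows how close the two scores are when $p_f \gg p_b$. The term names and numbers are purely illustrative, not real data.

```python
def jlh(p_f, p_b):
    """Full (unclamped) JLH score."""
    return (p_f - p_b) * p_f / p_b


def simplified(p_f, p_b):
    """Quadratic part only."""
    return p_f * p_f / p_b


# Hypothetical (p_f, p_b) pairs for three terms
terms = {"a": (0.30, 0.010), "b": (0.10, 0.001), "c": (0.05, 0.002)}

for name, (p_f, p_b) in terms.items():
    full, simple = jlh(p_f, p_b), simplified(p_f, p_b)
    # full == simple * (1 - p_b / p_f), a factor close to 1 when p_f >> p_b
    print(name, round(full, 4), round(simple, 4), round(full / simple, 4))

rank_full = sorted(terms, key=lambda t: jlh(*terms[t]), reverse=True)
rank_simple = sorted(terms, key=lambda t: simplified(*terms[t]), reverse=True)
print(rank_full, rank_simple)
```

Here both orderings agree. Terms whose ratio $p_f/p_b$ is closer to one can still swap places, because the correction factor $1 - p_b/p_f$ then differs noticeably between terms.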

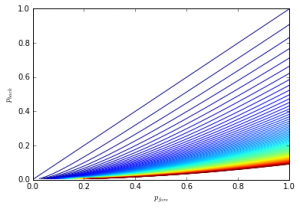

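Looking at the level sets of the JLH score, there is a quadratic relationship between $p_f$ and $p_b$. Solving $\frac{p_f^2}{p_b} - p_f = c$ for a fixed level $c > 0$, we get:

$$p_f = \frac{p_b \pm \sqrt{p_b^2 + 4 c\, p_b}}{2}$$

where the negative root lies outside the domain of the function.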
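The positive root can be checked directly. This sketch (function names are ours) computes $p_f$ on the level set $c = 0.5$ for a few background frequencies and plugs it back into the score:

```python
import math


def raw_jlh(p_f, p_b):
    """Unclamped JLH score."""
    return (p_f - p_b) * p_f / p_b


def level_set_p_f(c, p_b):
    """Positive root of p_f**2 - p_b * p_f - c * p_b = 0, for level c > 0."""
    return (p_b + math.sqrt(p_b * p_b + 4 * c * p_b)) / 2


c = 0.5
for p_b in [0.001, 0.01, 0.1]:
    p_f = level_set_p_f(c, p_b)
    print(p_b, round(p_f, 4), round(raw_jlh(p_f, p_b), 6))  # score stays at c
```

Because $\sqrt{p_b^2 + 4 c\, p_b} > p_b$ for any $c > 0$, the other root is always negative.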

This is far easier to see in the simplified formula: setting $p_f^2/p_b = c$ gives $p_f = \sqrt{c\, p_b}$. An increase in $p_b$ must therefore be offset by approximately a square-root increase in $p_f$ to retain the same score.
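For the simplified score the square-root trade-off is exact. In this small sketch (names ours, level $c$ arbitrary) quadrupling $p_b$ only requires doubling $p_f$ to stay on the same level:

```python
import math


def simplified(p_f, p_b):
    """Quadratic part of the JLH score."""
    return p_f * p_f / p_b


def p_f_for_level(c, p_b):
    """Simplified level set: p_f**2 / p_b = c, so p_f = sqrt(c * p_b)."""
    return math.sqrt(c * p_b)


c = 2.0
base = p_f_for_level(c, 0.01)
quadrupled = p_f_for_level(c, 0.04)
print(quadrupled / base)  # approximately 2: 4x the background needs 2x the foreground
```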

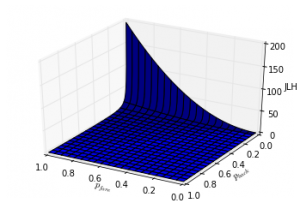

As we see, the score increases sharply with $p_f$: it grows quadratically in $p_f$ while falling off only inversely with $p_b$. As $p_b$ becomes small compared to $p_f$, the growth goes from roughly linear in $p_f$ to squared.
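The two regimes can be seen with a fixed $p_b = 0.01$ (an arbitrary choice for this sketch). Just above $p_b$ the score is roughly the excess $p_f - p_b$, so doubling the excess roughly doubles the score. Far above $p_b$ the score behaves like $p_f^2/p_b$, so doubling $p_f$ roughly quadruples it.

```python
def raw_jlh(p_f, p_b):
    """Unclamped JLH score."""
    return (p_f - p_b) * p_f / p_b


p_b = 0.01

# Near p_f == p_b: score ~ (p_f - p_b), roughly linear in the excess
for excess in [0.001, 0.002, 0.004]:
    print("excess", excess, raw_jlh(p_b + excess, p_b))

# Far above p_b: score ~ p_f**2 / p_b, roughly quadratic in p_f
for p_f in [0.2, 0.4, 0.8]:
    print("p_f", p_f, raw_jlh(p_f, p_b))
```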

Finally a 3D plot of the score function.

So what can we take away from all this? I think the main practical consideration is the squared relationship between $p_f$ and $p_b$, which means that once there is a significant difference between the two, $p_f$ will dominate the score ranking. The $p_b$ factor primarily makes the score sensitive when it is small, and for terms with reasonably similar $p_f$ it is $p_b$ that decides the ranking. There are some obvious consequences of this which would be interesting to explore in real data. First, you would want a large background document set if you need finer-grained sensitivity to background frequency. Second, foreground frequencies can dominate the score to such an extent that peculiarities of the implementation may show up in the significant terms ranking, which we will look at in more detail when we try to apply the significant terms aggregation to single documents.

The results and visualizations in this blog post are also available as an IPython notebook.

Nice write up. I plotted some of the other scoring heuristics here: https://twitter.com/elasticmark/status/513320986956292096

For me, the choice of scoring function is essentially a question of emphasis between precision and recall. If I analyse the results of a search for “Bill Gates”, do I suggest “Microsoft” or “bgC3” as the most significant term? The rarer bgC3 is more closely allied (so high precision) but perhaps of less practical use due to poor recall. The inverse is true of Microsoft.

Putting aside the question of precision/recall emphasis, I found the following features do the most to improve significance suggestions on text:

1) Removal of duplicate/near duplicate text in the results being analysed

2) Discovery of phrases

3) Analysing top-matching samples not all content (see the new “sampler” agg)

4) “Chunking” documents e.g. into sentence-docs if the foreground sample of docs is low in number.

Additionally, text analysis in elasticsearch should ideally not be reliant on field data to avoid memory issues.

These approaches are a little detailed to get into here but are important parts of improving elasticsearch’s significance algos on free text.

The mystery of “JLH” is my daughter’s initials :)

Cheers,

Mark

Ayup, thanks for letting us in on the name and for mentioning the new sampler aggregation :) That looks quite interesting.

The precision/recall viewpoint is indeed illuminating. I tend to shy away from it since it feels tied to a specific task or goal. I’m not really sure I would call bgC3 more precise than Microsoft in this case, although bgC3 probably points to a more limited set of documents. It’s all in the context, I guess.

If you can structure your data in a manner that supports your task, that is certainly a good idea. Chunking up words, I feel, is something that has to be done with more care. Pure collocational or n-gram approaches can end up emphasizing turns of phrase without interesting content; you might call them phrase stopwords. I’d be more inclined to extract meaningful multiword units such as verb complexes or entities.

Doing sentence/paragraph docs incurs indexing costs and seems to me to be a poor man’s estimate of the term frequency. It’s certainly a practical solution, but it would be nice if there was an option for using the TF in the significant terms aggregation for foreground sets where that is feasible.

Anyhow, thanks for putting cool stuff like this into Elasticsearch and hope you’ll find ways to make significant terms into an even more precise and flexible tool than it is today.

Talk soon!

André