Some CSV import tricks in Neo4j

The CSV file import facility in Neo4J is interesting in that it allows you to run Cypher queries iteratively over your dataset. This gives us a lot of flexibility and relieves us of the need for transforming our data to a Neo4J specific format. We can export tables with for example foreign keys to other tables and reconstruct our relationships during import. The key to doing this successfully is adding nodes and relationships on demand with the MERGE clause.

There are some quite good resources on CSV import in Neo4J already. Instead of repeating it we’ll just drop the linkshere: http://neo4j.com/docs/stable/queryn-load-csv.htmlhttp://neo4j.com/docs/stable/query-load-csv.html

http://jexp.de/blog/2014/06/load-csv-into-neo4j-quickly-and-successfully/

http://blog.graphenedb.com/blog/2015/01/13/importing-data-into-neo4j-via-csv/

http://jexp.de/blog/2014/06/using-load-csv-to-import-git-history-into-neo4j/

To recap the main points:

- Set up constraints and indexes before importing. Without them the import queries will be very slow

- Use USING PERIODIC COMMIT to speed up the importing process.

- Make sure your heap is big enough, especially if committing multiple queries. It is recommended that the entire dataset fits in the cache (see http://neo4j.com/docs/stable/configuration-caches.html#_file_buffer_cache).

- Values are read as strings and must be converted before insertion.

- You can provide defaults with the COALESCE clause.

- Use MERGE and MATCH to add data duplicated across tables or in denormalized tables.

With these main points in mind we’ll look at some examples and tricks.

MERGE instead of CREATE UNIQUE

In general it seems better to use MERGE rather than CREATE UNIQUE. This last clause tend to have some surprising corner cases, while MERGE on the whole is behaves for more predictably. It is important to remember though that MERGE will create any node that in a pattern if the whole pattern doesn’t match if constraints are not violated. Often that is not what you want, and instead you need to use MATCH to find existing part of a pattern and call MERGE matching just the part of the pattern you intend to create.

In this example we don’t have uniqueness constraints on the user property and matching the in the MERGE would create duplicate :USER nodes.

USING PERIODIC COMMIT 1000

LOAD CSV WITH HEADERS FROM “file://blabla.csv” AS csvLine

MATCH (uf :USER { twitter_name: toInt(csvLine.follower_name) })

MATCH (u :USER { twitter_name: toInt(csvLine.twitter_name) })

MERGE uf -[:FOLLOWS]-> u

Skipping incomplete rows

This is rather straightforward with a WHERE keyword, but is made more complicated than it needs to because it has to be attached to clause. Most straightforward way to introduce a clause is with the WITH statement, but as WITH separates parts of the query we need to parse the whole CSV line here and bind it in the clause. Accessing the CSV line object won’t work within the clause.

USING PERIODIC COMMIT 1000

LOAD CSV WITH HEADERS FROM “file://blabla.csv” AS csvLine

WITH toInt(csvLine.twitterid) as twitterid, toInt(csvLine.seat) as seat, csvLine.firstname as firstname,

csvLine.lastname as lastname, csvLine.party as party, csvLine.region as region, csvLine.type as type

WHERE twitterid IS NOT NULL

MERGE (u :USER { seat: seat, firstname: firstname, lastname: lastname, party: party, region: region,

type: type, twitterid: twitterid })

Accumulating relationship strength

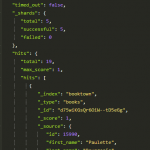

In many cases the rows in a CSV file describes a list of relationships where duplicate lines describes a recurring and stronger relationship. Here we’d rather not create multiple links and have to count the link cardinality to get the strength between two nodes, but rather accumulate the repeated relationships in a property on the relationship. This is easy using the ON MATCH and ON CREATE part of a MERGE clause.

USING PERIODIC COMMIT 1000

LOAD CSV WITH HEADERS FROM “file://blabla.csv” AS csvLine

MATCH (u :USER { twitterid: toInt(csvLine.twitterid) })

MERGE (h :HASHTAG { text: csvLine.text})

MERGE u -[t :TWEETED]-> h

ON MATCH SET t.count = t.count + 1 ON CREATE SET t.count = 1

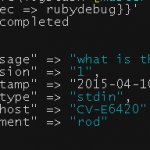

Hi, thanks a lot for the great blog post, I just wanted to comment on two things:

1. Use MERGE always with one property only if you want to avoid duplicates (otherwise use CREATE). User ON CREATE SET .. to set the other properties, potentially also with ON CREATE SET n += csvLine

2. You don’t have to decompose csvLine for WITH, this works perfectly fine, the use of WITH is currently still required for WHERE, but the decomposition is optional

but as WITH separates parts of the query we need to parse the whole CSV line here and bind it in the clause. Accessing the CSV line object won’t work within the clause.

USING PERIODIC COMMIT 1000

LOAD CSV WITH HEADERS FROM “file://blabla.csv” AS csvLine

WITH toInt(csvLine.twitterid) as twitterid, csvLine

WHERE twitterid IS NOT NULL

MERGE (u :USER { twitterid: twitterid }) ON CREATE SET

u.seat=toInt(csvLine.seat), u.firstname=csvLine.first name, u.lastname=csvLine.lastname, u.party=csvLine.party, u.region=csvLine.region, u.type = csvLine.type

….

or

ON CREATE SET u += csvLine, u.seat=toInt(csvLine.seat)

Thanks for the clarifications. Your usage of WITH with just csvLine is a lot easier in the eyes.