FAST ESP Advanced Linguistics — Part 1/3

This series of blog posts covers how to set up FAST ESP special tokenization, character normalization, phonetic normalization and lemmatization.

Part 2: Phonetic Normalization

Part 3: Lemmatization

Tokenization and Character Normalization

Key info

| Item | Value |
| --- | --- |
| index profile | ~/esp/index-profiles/index-profile.xml |
| config | ~/esp/etc/tokenizer/tokenization.xml |
| content pipeline stages | Tokenizer(webcluster) (automatically generated) |

How it works

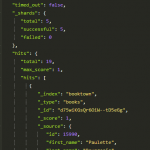

Tokenization is the process of splitting a string into searchable tokens. This is done both to create the index and to identify which parts of the query to consider a token when searching. (E.g. one tokenization could consider “13.2” one token, while another could consider it “13” and “2”, so that a search for “13” alone would produce a hit.)
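The difference between the two tokenizations can be illustrated with a minimal Python sketch. This is not how FAST ESP implements tokenization; the function names `tokenize_delimiters` and `tokenize_keep_decimals` are made up for illustration, and the regular expressions are only an approximation of the idea.

```python
import re


def tokenize_delimiters(text):
    """Treat every non-word character as a delimiter, so '13.2' splits in two."""
    return [token for token in re.split(r"\W+", text) if token]


def tokenize_keep_decimals(text):
    """Keep digit groups joined by '.' together as one token (hypothetical rule)."""
    return re.findall(r"\d+(?:\.\d+)+|\w+", text)


if __name__ == "__main__":
    text = "Version 13.2 released"
    print(tokenize_delimiters(text))     # ['Version', '13', '2', 'released']
    print(tokenize_keep_decimals(text))  # ['Version', '13.2', 'released']
```

With the first tokenizer a query for “13” finds the document, because “13” is a token of its own. With the second it does not, because the only token containing those digits is “13.2”.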

Character normalization maps certain characters to a common form. (E.g. transforming “ø”, “ö” and “oe” into “ø”, so that a search for “ørjan” also hits “örjan” and vice versa.) Character normalization can be set up using content and query pipeline stages or through the tokenizer. It does not alter the text stored in the index field. (I.e. name:“andré” still looks like that even though it is normalized and searchable as “andre”.)

Configuration

The character normalization is set up to do lowercasing and accent removal and a few safe normalizations such as “ï” to “i”. Most notably it normalizes “aa” into “å”. The earlier translation of Swedish into Norwegian characters has been removed.

If we want this translation back, we will have to:

- Set up the normalizations in the skip-set in tokenization.xml.

- Alter all lemmatization dictionaries to consider the new normalizations and recompile them.

- Alter the file ~/esp/etc/character_normalization.xml accordingly. This file is used by the normalizing completion matchers and by the generation script for their automatons. Then regenerate all those automatons.
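To make the normalizations described in this section concrete, here is a rough Python sketch of lowercasing, accent removal and the “aa” to “å” rule. It is an approximation for illustration only: the real rules live in tokenization.xml, the function name `normalize` and the `PROTECTED` set are assumptions, and the handling of letters not mentioned in this post may well differ in the actual configuration.

```python
import unicodedata

# Assumption: Norwegian letters are kept as they are and never stripped.
PROTECTED = set("åæø")


def normalize(text):
    """Lowercase, strip accents (except protected letters), then map 'aa' to 'å'."""
    text = unicodedata.normalize("NFC", text.lower())
    out = []
    for ch in text:
        if ch in PROTECTED:
            out.append(ch)
            continue
        # Decompose the character and drop the combining accent marks.
        decomposed = unicodedata.normalize("NFD", ch)
        out.append("".join(c for c in decomposed if not unicodedata.combining(c)))
    return "".join(out).replace("aa", "å")


if __name__ == "__main__":
    stored = "Aase André"
    # The stored text is untouched; only the search key is normalized.
    print(stored, "->", normalize(stored))  # Aase André -> åse andre
```

Note that the original string is left as it is and only the normalized copy is used for matching, which mirrors the point above that the text in the index field is not altered.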

Document processing

The content pipeline needs the stage Tokenizer(webcluster) in order to do tokenization and character normalization. (Lemmatization should come after this stage.)

Default tokenization is “delimiters”, but in order to activate the special tokenization in tokenization.xml (such as “aa” => “å”), one needs to set the attribute tokenize="auto" on each field in the index profile. The composite fields should also have query-tokenize="auto" set to activate the tokenizations set on each field.
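Since it is easy to miss a field, a small script can list the fields that do not have these attributes set. The sketch below is only a starting point. It assumes that fields appear as `field` elements and composite fields as `composite-field` elements, each with a `name` attribute; the sample XML is invented and much simpler than a real index profile, so check the element names against your own index-profile.xml before relying on it.

```python
import xml.etree.ElementTree as ET

# Invented, heavily simplified sample; a real index profile has more structure.
SAMPLE = """
<index-profile>
  <field-list>
    <field name="title" tokenize="auto"/>
    <field name="body"/>
  </field-list>
  <composite-field name="content" query-tokenize="auto"/>
  <composite-field name="everything"/>
</index-profile>
"""


def local_name(tag):
    """Strip an XML namespace prefix such as '{uri}field' down to 'field'."""
    return tag.rsplit("}", 1)[-1]


def fields_missing_auto(xml_text):
    """Return (kind, name) pairs for fields lacking the 'auto' setting."""
    missing = []
    for elem in ET.fromstring(xml_text).iter():
        kind = local_name(elem.tag)
        if kind == "field" and elem.get("tokenize") != "auto":
            missing.append((kind, elem.get("name")))
        elif kind == "composite-field" and elem.get("query-tokenize") != "auto":
            missing.append((kind, elem.get("name")))
    return missing


if __name__ == "__main__":
    for kind, name in fields_missing_auto(SAMPLE):
        print(f"{kind} '{name}' is not set to auto")
```

To run it against a real profile, read the file contents and pass them to `fields_missing_auto` instead of `SAMPLE`.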

Character normalization is carried out regardless of what kind of tokenization is specified.

Query tokenization

The query transformation configuration file is qtf_config.xml. The pipeline in use is scopesearch. It should contain the entry

<instance-ref name="tokenize" critical="1"/>

After editing qtf_config.xml, issue this command to activate the changes:

view-admin -m refresh

Usage

Simply search as normal. Note that punctuation characters are considered delimiters, so unless special tokenization is set up for certain fields (requiring at least non-default content pipeline Tokenizer stage(s)), a search for “13” against a string field containing “13.2” would require a field with boundary-match="yes" and an FQL query using the equals operator instead of “string”. (That is, unless you also want a hit in “13.1”.)

Beware!

Changes to the index profile are propagated to the document processing and query pipelines, which is how they know which fields to apply the tokenization (etc.) to. So make sure you do not overwrite these changes from deployment scripts (such as espdeploy) without first applying the changes to the deployment source tree.