How To: Debug and log FAST Search pipeline extensibility stages in Visual Studio

One of the most powerful features of FAST Search Server 2010 for SharePoint (FS4SP) is the ability to process the indexed data before it is made searchable. Examples include extracting location names from the documents being indexed, or enriching the data from external sources, such as adding financial data to a customer's CRM record based on a lookup key. Only your imagination limits the possibilities.

As the extensibility demo code seems to be missing from MSDN, I decided to create a stage that counts the number of words in the crawled document. A special crawled property set contains three fields: “body”, which holds the extracted text of the crawled item; “data”, which holds the binary content of the source document in base64 encoding; and “url”, which holds the link used when displaying results. My stage uses the body field.

First I create a new property set for the crawled property my program will emit. I could have used one of the existing ones, but I find it easier to keep my custom properties in a separate location. I name the property set “mAdcOW” and assign it an arbitrary GUID. You can generate a GUID in PowerShell with the following command:

```powershell
[guid]::NewGuid()
```

The PowerShell command to create a new property set/category with my chosen guid looks like this:

```powershell
New-FASTSearchMetadataCategory -Name "mAdcOW" -Propset FA585F53-2679-48d9-976D-9CE62E7E19B7
```

The GUID is important because it is used later in the pipeline extensibility configuration. By default, the property set adds newly discovered properties as they are seen during the crawl, which saves us the work of manually creating the crawled properties we are going to use.

For maintainability I create a folder for my module below the FASTSearch root, named C:\FASTSearch\pipelinemodules. Check the %FASTSEARCH% environment variable for your actual FS4SP location.

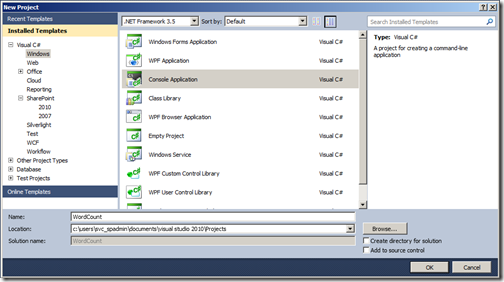

Now over to the actual pipeline stage. In Visual Studio create a new “Console Application”. I give it the name “WordCount”.

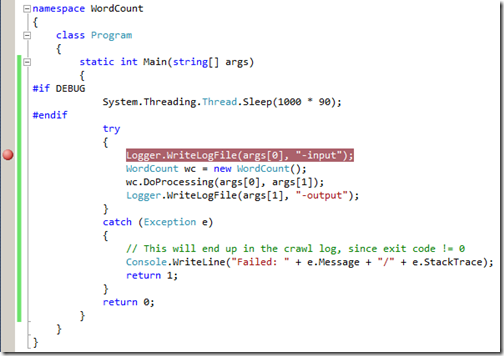

In Program.cs I have the following code:

```csharp
using System;
using System.Threading;

internal class Program
{
    private static int Main(string[] args)
    {
#if DEBUG
        // Pause for 90 seconds to leave time to attach the Visual Studio debugger
        Thread.Sleep(1000 * 90);
#endif
        try
        {
            // args[0] is the input file and args[1] the output file from the pipeline
            Logger.WriteLogFile(args[0], "input");
            WordCount wc = new WordCount();
            wc.DoProcessing(args[0], args[1]);
            Logger.WriteLogFile(args[1], "output");
        }
        catch (Exception e)
        {
            // This will end up in the crawl log, since exit code != 0
            Console.WriteLine("Failed: " + e.Message + "/" + e.StackTrace);
            return 1;
        }
        return 0;
    }
}
```

Take notice of the `#if DEBUG` part. The pause is there to give you time to attach the Visual Studio debugger. I did try to use `System.Diagnostics.Debugger.Break()`, but the context the pipeline stage runs under does not have access to invoke the debugger.
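A variation on the fixed pause, not taken from the original project: poll `System.Diagnostics.Debugger.IsAttached` so the stage continues as soon as the debugger is attached, instead of always sleeping for 90 seconds. The `DebugWait` class and its parameters are hypothetical names used only for this sketch.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

// Hypothetical helper, not part of the original WordCount project.
internal static class DebugWait
{
    // Blocks until a debugger attaches or the timeout expires.
    // Returns true if a debugger is attached when the method returns.
    public static bool WaitForDebugger(TimeSpan timeout, TimeSpan pollInterval)
    {
        Stopwatch sw = Stopwatch.StartNew();
        while (!Debugger.IsAttached && sw.Elapsed < timeout)
        {
            Thread.Sleep(pollInterval);
        }
        return Debugger.IsAttached;
    }
}
```

In `Main`, the `Thread.Sleep(1000 * 90)` line inside `#if DEBUG` would then become `DebugWait.WaitForDebugger(TimeSpan.FromSeconds(90), TimeSpan.FromMilliseconds(500));`. Keeping a timeout means an unattended debug build still finishes its item rather than blocking the document processor indefinitely.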

You might also have noticed the Logger.WriteLogFile lines in the Main function. I adapted this logging code from an MSDN blog entry, restructuring it a bit, and added one configuration key to turn logging on or off and another to specify the name of the log folder. An important piece of information from the blog entry is that the pipeline stage only has write access to the C:\Users\username\AppData\LocalLow folder. Instead of hard-coding that path, I added code that uses the Win32 API to look up the correct folder, in case it resides on a drive or folder other than “Users”.
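The Logger class itself isn't listed in the post. A minimal sketch of the idea, assuming the logger simply copies the pipeline's input or output XML file into a folder below LocalLow, could look like this. It resolves the folder with the Win32 `SHGetKnownFolderPath` function and `FOLDERID_LocalAppDataLow`; the `Enabled`, `LogFolderName` and `BaseFolder` fields are hypothetical stand-ins for the configuration keys mentioned above.

```csharp
using System;
using System.IO;
using System.Runtime.InteropServices;

// Hypothetical sketch of a Logger like the one called from Main; not the author's code.
internal static class Logger
{
    // FOLDERID_LocalAppDataLow, normally C:\Users\<user>\AppData\LocalLow
    private static readonly Guid LocalAppDataLow = new Guid("A520A1A4-1780-4FF6-BD18-167343C5AF16");

    [DllImport("shell32.dll")]
    private static extern int SHGetKnownFolderPath(
        [MarshalAs(UnmanagedType.LPStruct)] Guid rfid, uint dwFlags, IntPtr hToken, out IntPtr ppszPath);

    // Assumed settings; the post reads the equivalents from configuration keys.
    public static bool Enabled = true;
    public static string LogFolderName = "PipelineLogs";

    // Optional override of the base folder; when null, LocalLow is looked up via Win32.
    public static string BaseFolder = null;

    // Resolves the LocalLow folder through the Win32 known-folder API (Windows only).
    public static string GetLocalLowFolder()
    {
        IntPtr pathPtr = IntPtr.Zero;
        try
        {
            int hr = SHGetKnownFolderPath(LocalAppDataLow, 0, IntPtr.Zero, out pathPtr);
            Marshal.ThrowExceptionForHR(hr); // throws only for failure HRESULTs
            return Marshal.PtrToStringUni(pathPtr);
        }
        finally
        {
            Marshal.FreeCoTaskMem(pathPtr); // no-op for IntPtr.Zero
        }
    }

    // Copies the pipeline's input or output XML file into the log folder,
    // naming the copy <original name>_<suffix>.xml.
    public static void WriteLogFile(string sourceFile, string suffix)
    {
        if (!Enabled) return;
        string folder = Path.Combine(BaseFolder ?? GetLocalLowFolder(), LogFolderName);
        Directory.CreateDirectory(folder);
        string target = Path.Combine(folder,
            Path.GetFileNameWithoutExtension(sourceFile) + "_" + suffix + ".xml");
        File.Copy(sourceFile, target, true);
    }
}
```

Copying the files unchanged keeps the logger independent of the XML format, so the log folder shows exactly what the pipeline handed to the stage and what the stage returned.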

DoProcessing takes two arguments: the input file to read and the output file to write. Both paths are passed in by the document processing pipeline, which is how custom stages work: they read an XML file with the data to process and write out a new one with the new or modified data.
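To exercise the stage without running a crawl, it can help to write a fake input file by hand. This sketch assumes the input uses a `Document` root element with `CrawledProperty` children carrying `propertySet`, `propertyName` and `varType` attributes, which is the shape the word count code reads; the `SampleInput` class is hypothetical, and a real file from the logging folder is the authoritative reference for the format.

```csharp
using System;
using System.Xml.Linq;

// Hypothetical helper for producing test input outside the pipeline.
internal static class SampleInput
{
    // Property set holding the special url/body/data crawled properties
    private const string CrawledCategoryFAST = "11280615-f653-448f-8ed8-2915008789f2";

    // Writes an input file containing the url and body properties (varType 31 = string)
    public static void Write(string path, string url, string body)
    {
        XElement doc = new XElement("Document",
            Property("url", url),
            Property("body", body));
        doc.Save(path);
    }

    private static XElement Property(string name, string value)
    {
        return new XElement("CrawledProperty",
            new XAttribute("propertySet", CrawledCategoryFAST),
            new XAttribute("propertyName", name),
            new XAttribute("varType", "31"),
            value);
    }
}
```

Passing such a file and an output path as the two command-line arguments (set under the project's Debug properties) lets you run the stage directly from Visual Studio, although the 90-second `#if DEBUG` pause still applies.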

The word count code uses the XDocument class and LINQ to XML to read the input and write the output. At the top you see a declaration of the GUID I used for my property set, and of the GUID for the special crawled property set that contains the body property. These are the same GUIDs as in the pipelineextensibility.xml configuration file; in short, the code selects the properties that the configuration file specifies as input.

```csharp
using System;
using System.Linq;
using System.Text.RegularExpressions;
using System.Xml.Linq;

internal class WordCount
{
    // this propset contains url/body/data - http://msdn.microsoft.com/en-us/library/ff795815.aspx
    private static readonly Guid CrawledCategoryFAST = new Guid("11280615-f653-448f-8ed8-2915008789f2");
    // the custom property set created with New-FASTSearchMetadataCategory
    private static readonly Guid CrawledCategorymAdcOW = new Guid("fa585f53-2679-48d9-976d-9ce62e7e19b7");
    // one word = a run of non-whitespace characters
    private static readonly Regex Word = new Regex(@"\S+", RegexOptions.Compiled);

    // Actual processing
    public void DoProcessing(string inputFile, string outputFile)
    {
        XDocument inputDoc = XDocument.Load(inputFile);

        // Fetch the body property (varType 31 = string) from the input item
        var res = (from cp in inputDoc.Descendants("CrawledProperty")
                   where new Guid(cp.Attribute("propertySet").Value).Equals(CrawledCategoryFAST)
                         && cp.Attribute("propertyName").Value == "body"
                         && cp.Attribute("varType").Value == "31"
                   select cp.Value).ToList();

        // Count the words; counting \S+ matches instead of splitting on \s+
        // avoids counting empty fields from leading/trailing white space
        int wordCount = res.Sum(s => Word.Matches(s).Count);

        // Create the output item
        XElement outputElement = new XElement("Document");
        if (res.Count > 0 && res[0].Length > 0)
        {
            outputElement.Add(
                new XElement("CrawledProperty",
                    new XAttribute("propertySet", CrawledCategorymAdcOW),
                    new XAttribute("propertyName", "wordcount"),
                    new XAttribute("varType", 20), // 20 = VT_I8, a 64-bit integer
                    wordCount));
        }
        outputElement.Save(outputFile);
    }
}
```
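Splitting on `\s+` and taking the array length counts an empty leading or trailing field whenever the extracted text starts or ends with white space, and it reports one word for an empty string. This small standalone comparison, with hypothetical class and method names, shows the difference between that approach and counting `\S+` matches.

```csharp
using System;
using System.Text.RegularExpressions;

// Hypothetical comparison of two ways to count words.
internal static class WordCounting
{
    private static readonly Regex WhiteSpace = new Regex(@"\s+");
    private static readonly Regex Word = new Regex(@"\S+");

    // Number of pieces produced by splitting on runs of white space
    public static int CountBySplit(string text)
    {
        return WhiteSpace.Split(text).Length;
    }

    // Number of runs of non-whitespace characters
    public static int CountByMatches(string text)
    {
        return Word.Matches(text).Count;
    }
}
```

For `" hello world "`, `CountBySplit` returns 4 (two empty fields plus two words) while `CountByMatches` returns 2; for an empty string they return 1 and 0.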

After compiling a debug build of the program, I copy it to the folder created earlier, C:\FASTSearch\pipelinemodules.

By default, an FS4SP installation has four document processors running:

```
nctrl status

Document Processor procserver_1 11644 Running
Document Processor procserver_2 8224 Running
Document Processor procserver_3 5452 Running
Document Processor procserver_4 5920 Running
```

This means four items are processed in parallel. To make debugging easier, we stop all but one:

```
nctrl stop procserver_2 procserver_3 procserver_4
```

(If this is a shared or production environment, remember to start them again once you are done testing by replacing “stop” with “start” in the command above.)

Next I modify C:\FASTSearch\etc\pipelineextensibility.xml and add my word count stage.

```xml
<PipelineExtensibility>
  <Run command="C:\FASTSearch\pipelinemodules\WordCount.exe %(input)s %(output)s">
    <Input>
      <CrawledProperty propertySet="11280615-f653-448f-8ed8-2915008789f2" varType="31" propertyName="body"/>
      <!-- Included for debugging/traceability purposes -->
      <CrawledProperty propertySet="11280615-f653-448f-8ed8-2915008789f2" varType="31" propertyName="url"/>
    </Input>
    <Output>
      <CrawledProperty propertySet="fa585f53-2679-48d9-976d-9ce62e7e19b7" varType="20" propertyName="wordcount"/>
    </Output>
  </Run>
</PipelineExtensibility>
```
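Before resetting the document processors, a quick way to catch typos in the GUIDs or property names is to load the file and list what each `Run` element declares. This is a hypothetical helper, not part of FS4SP; it relies only on the element and attribute names used in the configuration above.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

// Hypothetical sanity check for pipelineextensibility.xml.
internal static class PipelineConfigReader
{
    // Returns one line per Run command, followed by one indented line
    // per declared crawled property: "  <Input|Output> <propset>:<name> (<varType>)"
    public static List<string> Describe(XDocument config)
    {
        var lines = new List<string>();
        foreach (XElement run in config.Root.Elements("Run"))
        {
            lines.Add("Run " + (string)run.Attribute("command"));
            foreach (string direction in new[] { "Input", "Output" })
            {
                foreach (XElement cp in run.Elements(direction).Elements("CrawledProperty"))
                {
                    // Throws if the propertySet attribute is missing or not a valid GUID
                    Guid propset = new Guid((string)cp.Attribute("propertySet"));
                    lines.Add(string.Format("  {0} {1}:{2} ({3})", direction, propset,
                        (string)cp.Attribute("propertyName"), (string)cp.Attribute("varType")));
                }
            }
        }
        return lines;
    }
}
```

Calling `PipelineConfigReader.Describe(XDocument.Load(@"C:\FASTSearch\etc\pipelineextensibility.xml"))` fails early on malformed XML or a broken GUID, and the listed GUIDs can be compared with the ones declared in the WordCount class.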

After saving the file, I reset the document processors so they read the updated configuration:

```
psctrl reset
```

I have now deployed a new pipeline stage that is ready for testing. On the FAST Content SSA in SharePoint Central Administration, I start a new full crawl of my test content source.

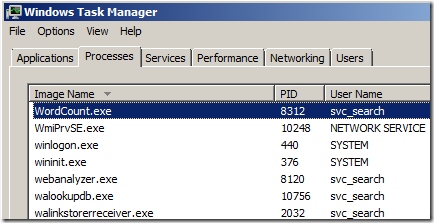

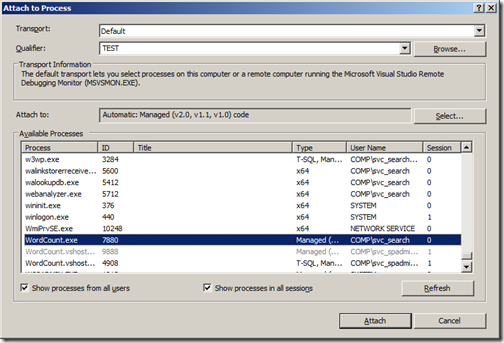

Start Windows Task Manager, check “Show processes from all users”, and wait for an instance of the program to appear.

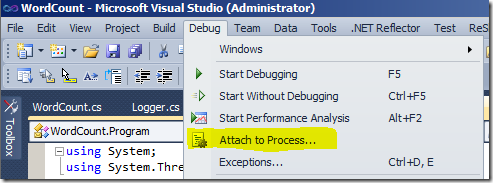

Switch back to Visual Studio and set a breakpoint in the code below the sleep statement.

Go to the “Debug” menu and choose “Attach to Process”.

Locate the process and click “Attach”. You might have to check “Show processes from all users” here as well for it to be displayed.

Once the sleep statement completes, you should be able to step through the code as you normally would in Visual Studio.

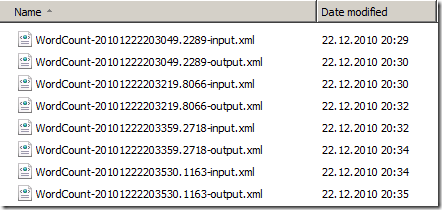

If logging is enabled in the configuration file, files will appear in the logging folder. The input files contain the url and body fields going in, and the output files contain the wordcount field going out, as specified in the configuration file.

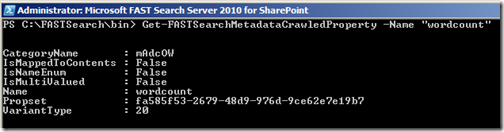

My crawled property “wordcount” has also been added during the crawl.

Next I create a managed property that can be used on the search results page and map the crawled property to it. This can also be done in the Admin UI instead of with PowerShell.

```powershell
# -Type 2 creates an integer managed property
$managedproperty = New-FASTSearchMetadataManagedProperty -Name wordcount -Type 2 -Description "Number of words"
$wordcount = Get-FASTSearchMetadataCrawledProperty -Name wordcount
New-FASTSearchMetadataCrawledPropertyMapping -ManagedProperty $managedproperty -CrawledProperty $wordcount
```

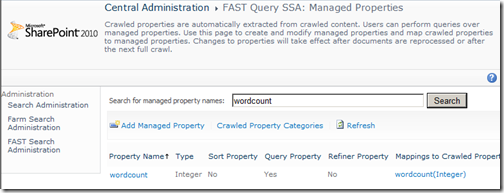

The new managed property also shows up in Central Admin:

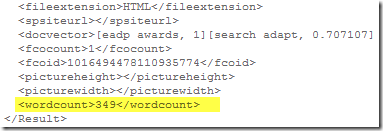

The result XML returned when executing a search now includes the newly added wordcount property. Remember to add the column to the “Fetched properties” list in the Search Core Results web part.

The Visual Studio project for the pipeline stage as well as the pipelineextensibility.xml can be downloaded from my SkyDrive.

(This post is cross-posted from Tech and Me)